Luma’s new Ray-2 AI text-to-video model is here, and it’s a significant upgrade over its earlier versions. But is it the best AI video generator out there?

How does it stack up against other platforms like Kling AI, which is considered a gold standard in AI video generation?

In this article, I’ll walk you through what the Ray-2 model is capable of, what’s new, and how it compares to Kling AI. There’s a lot to like about the new Luma model.

What is Luma’s Ray-2 Video Generator?

Luma’s Ray-2 Video Generator is an AI-powered tool that creates videos from text prompts. It supports up to 720p resolution and generates 5- or 10-second clips with dynamic camera movements and cinematic effects.

While it excels in facial expressions and prompt understanding, it struggles with full-body physics, consistency, and sharp details. Currently, it requires a subscription and is in early release, with plans for future updates like image-to-video features.

How to Access and Use Luma’s Ray-2 AI Video Model

Step 1: Accessing Luma AI Ray 2

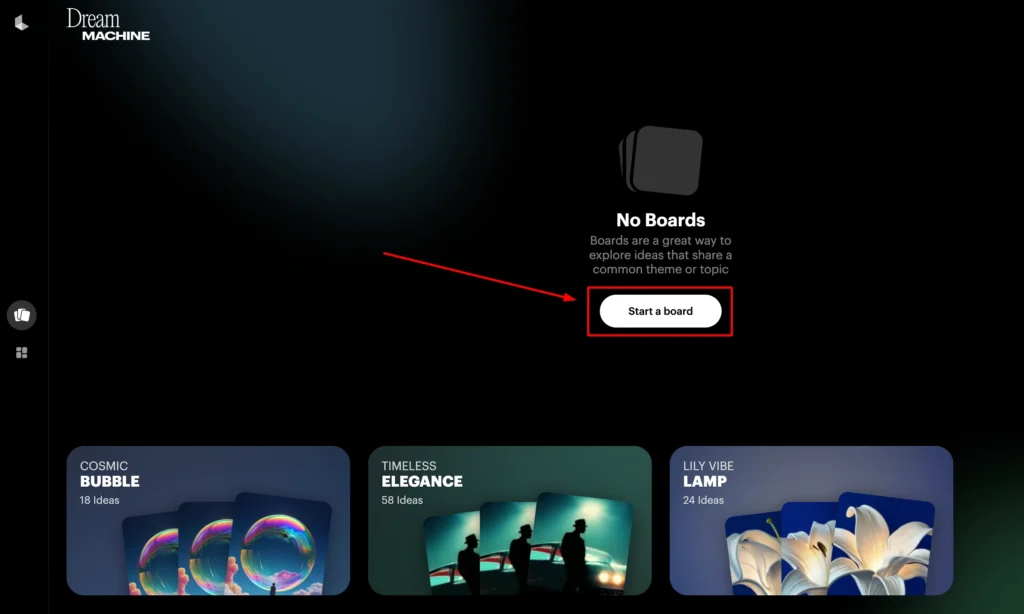

To get started with the new Ray 2 model, visit the Luma Labs AI website and follow these steps:

- Click “Try Now.” This redirects you to the Dream Machine interface.

- Click on “Start a Board.”

Now, you’ll need to adjust the settings to use the Ray 2 model.

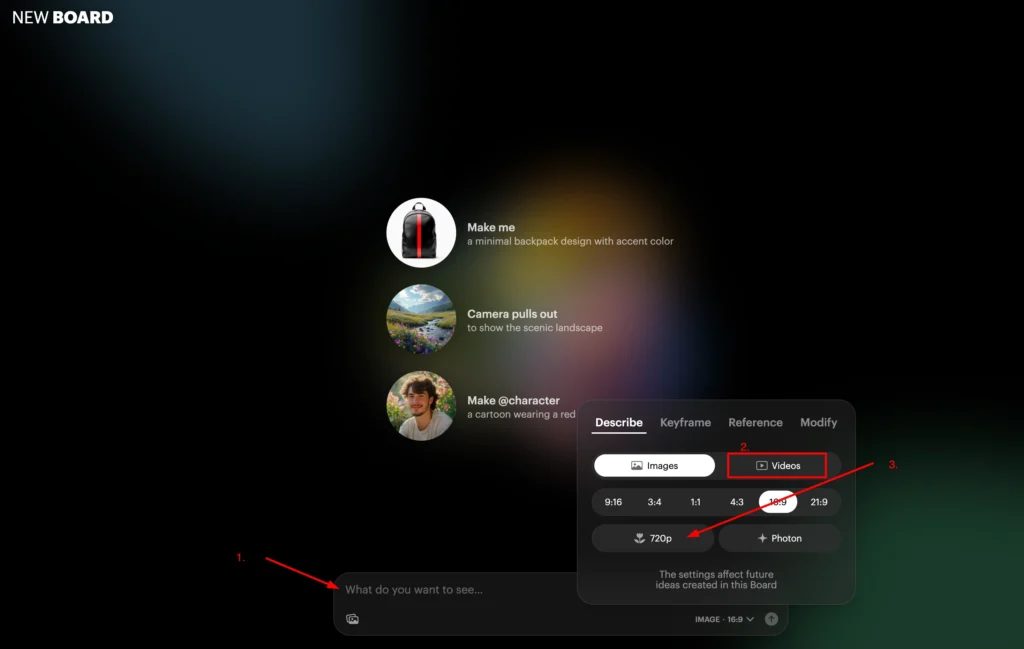

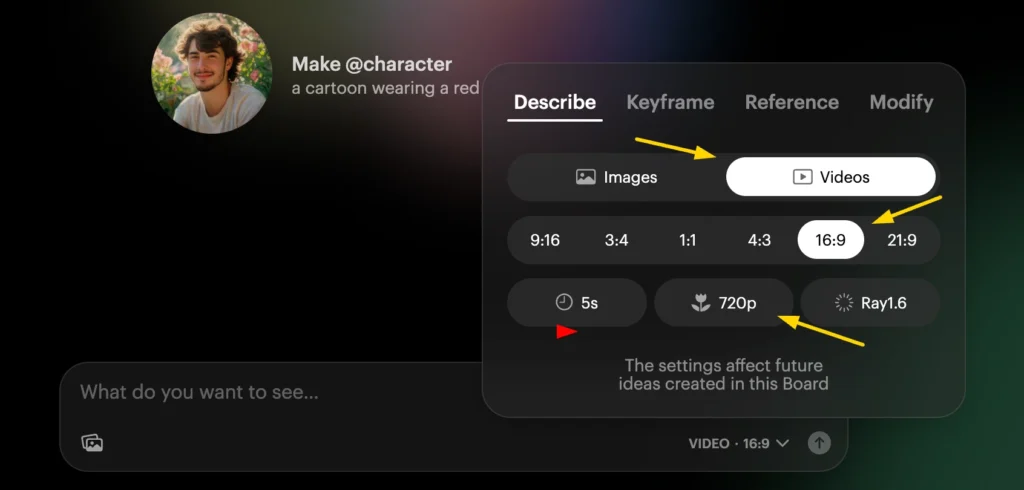

Step 2: Setting Up Ray 2

- In the settings, navigate to the “Videos” section.

- Here, you’ll see the supported aspect ratios.

- You’ll need to manually change the video model to Ray 2.

Currently, Ray 2 supports videos up to 720p resolution and offers two duration options: 5 or 10 seconds per video.

Once you’ve selected the model and settings, you can start creating your own videos by typing in your prompt.

Step 3: Subscription Requirements

Note that the Ray 2 model requires a paid subscription:

- Basic Plan: $10/month

- Pro Plan: $30/month

- Enterprise Plan: Custom pricing

The subscription may seem a bit pricey, but it’s worth testing the model to see whether it meets your needs.

Testing Luma AI Ray 2: My Experience

To give you a better idea of Ray 2’s capabilities, I ran several tests. Here’s a detailed review of my experience:

Test 1: A Girl Walking on the Street

For my first test, I prompted Ray 2 to generate a video of “a girl walking on the street.”

Here’s what I observed:

- The generated video looked blurry.

- There were noticeable screen distortions.

- Ray 2 created two videos, but one of them failed during generation.

Overall, the result was underwhelming, especially considering the subscription cost.

Test 2: Physics and Simulation Scenes

Next, I used more detailed prompts to see whether Ray 2 could handle complex visuals, asking it to create physics and simulation scenes.

Here’s what happened:

- The video had some stretching issues.

- However, the visual effects were cool and vibrant.

While the output wasn’t perfect, it showed potential for creative and abstract visuals.

Test 3: Photorealism

I wanted to test Ray 2’s photorealism and its handling of physical interactions, so I prompted it with “close up of nail polish.”

Here’s my take:

- The video started off looking good.

- However, as it progressed, noticeable visual artifacts and inconsistencies appeared.

This test highlighted that Ray 2 still has room for improvement when it comes to simulating realistic physical interactions.

While the concept was exciting, the execution fell short of my expectations.

Challenges and Limitations

During my testing, I encountered a few challenges:

- Long Processing Times: Some videos took up to 12 hours to process, and a few were still stuck on “dreaming” even after waiting overnight.

- Server Traffic: The servers may have been under heavy load, which could explain the delays and processing issues.

- Price vs. Performance: Compared to other AI tools like Kling AI or Hailuo AI, Ray 2 didn’t impress me as much, especially given its subscription cost.

Observations

Camera Motion: Ray-2 generates a lot of camera motion and maintains consistency relatively well.

Prompt Understanding: It understands prompts well. In one clip featuring a vintage car, snow, and sunlight, it captured every element, including dynamic camera movements that track the car.

Coherence: The entire video stays coherent, which is impressive for AI-generated content.

Ray-2 Strengths and Weaknesses

Strengths

1. Facial Expressions:

Ray-2 excels in generating close-up shots of people, especially facial expressions. For instance, I asked for an extreme expression of sadness, and the raw emotions on the face were vividly captured.

2. Background Consistency:

Even with rapid movements, the background remains consistent. For example, in a scene where a man walks forward quickly, the wet texture on his hat and the rain effects were well-rendered.

3. Cinematic Feel:

Many videos have a cinematic quality, such as one of a samurai walking in the ocean with a burning ship in the background.

Weaknesses

1. Full-Body Physics:

The model struggles with full-body physics, especially during quick movements. For example, a running character’s form starts to deform unnaturally.

2. Unstable Body Parts:

Body parts often deform or blur. In one scene, a character’s arms became blurry, and another character’s walking motion looked unnatural, with their lower body twisting oddly.

3. Gliding Effect:

Some characters appear to glide on the ground instead of walking naturally.

Comparison with Kling 1.6

Prompt Following

Luma Ray-2: Better at following prompts for dynamic actions. For example, when I asked for buildings to collapse in the background, Ray-2 delivered.

Kling 1.6: More restrictive in generating big movements but excels in maintaining coherency and sharp details.

Visual Quality

Luma Ray-2: Generates more movement and cinematic shots but lacks sharpness in details. For instance, a dodo bird in a Ray-2 video twists and turns dynamically but clearly looks like CGI.

Kling 1.6: Produces sharper details and maintains a consistent visual style. In the same dodo bird example, Kling’s bird and human characters look more cohesive.

Resolution, Video Length, and Future Updates

Current Capabilities

- Resolution: Ray-2 generates videos at 720p.

- Video Length: You can create 5-second or 10-second videos. However, in 10-second videos, the scene often starts to warp around the 6- to 7-second mark and loses consistency.

Future Updates

- Early Release: Currently, Ray-2 is in early release and only available to paid users.

- Upcoming Features: Luma Labs plans to release image-to-video and other features soon, which could further enhance the model’s capabilities.

Final Thoughts

Luma’s Ray-2 AI video model is an improvement over its predecessors, particularly in generating dynamic camera movements and cinematic shots. It excels in understanding prompts and creating emotionally expressive close-ups. However, it still struggles with full-body physics, stability, and maintaining sharp details compared to Kling 1.6.

While Ray-2 is more adventurous in following prompts, Kling 1.6 offers better coherency and visual sharpness. As Ray-2 is still in early release, it’s worth keeping an eye on future updates, especially the upcoming image-to-video feature.